Guided Investigation

⚠️ Preliminary Notes

These materials are part of the investigative process and are still under review. They were collected to structure reasoning and guide the research, not as a finished product. Please treat the content as provisional and subject to revision.

On April 22-23, 2025, I had a chance to discuss the Green Layer project with Zakir Durumeric and Kimberly Ruth, respectively, who kindly provided some guidance on how to further investigate Internet networking from a sustainability perspective. Below are the questions they recommended that I explore, along with the answers I have found to date. I may amend this page as new insights come to light.

Attribution: Zakir Durumeric (ZD), Kimberly Ruth (KR), and Thibaud Clement (TC).

Understanding Network Infrastructure and Energy Consumption

What does the current topology of Internet network infrastructure look like? (ZD)

As a reminder, from an Internet carbon footprint accounting perspective, and although methodology greatly varies by study1234, research literature generally converges towards the idea that the main sources of emissions in the ICT sector are data centers, networks, and user devices.

Specifically, the networks category generally refers to the data transmission infrastructure–everything that lies between data centers and end-users. The main actors in this category include physical telecom network operators, as well as Internet Service Providers (ISPs) and content distribution systems.

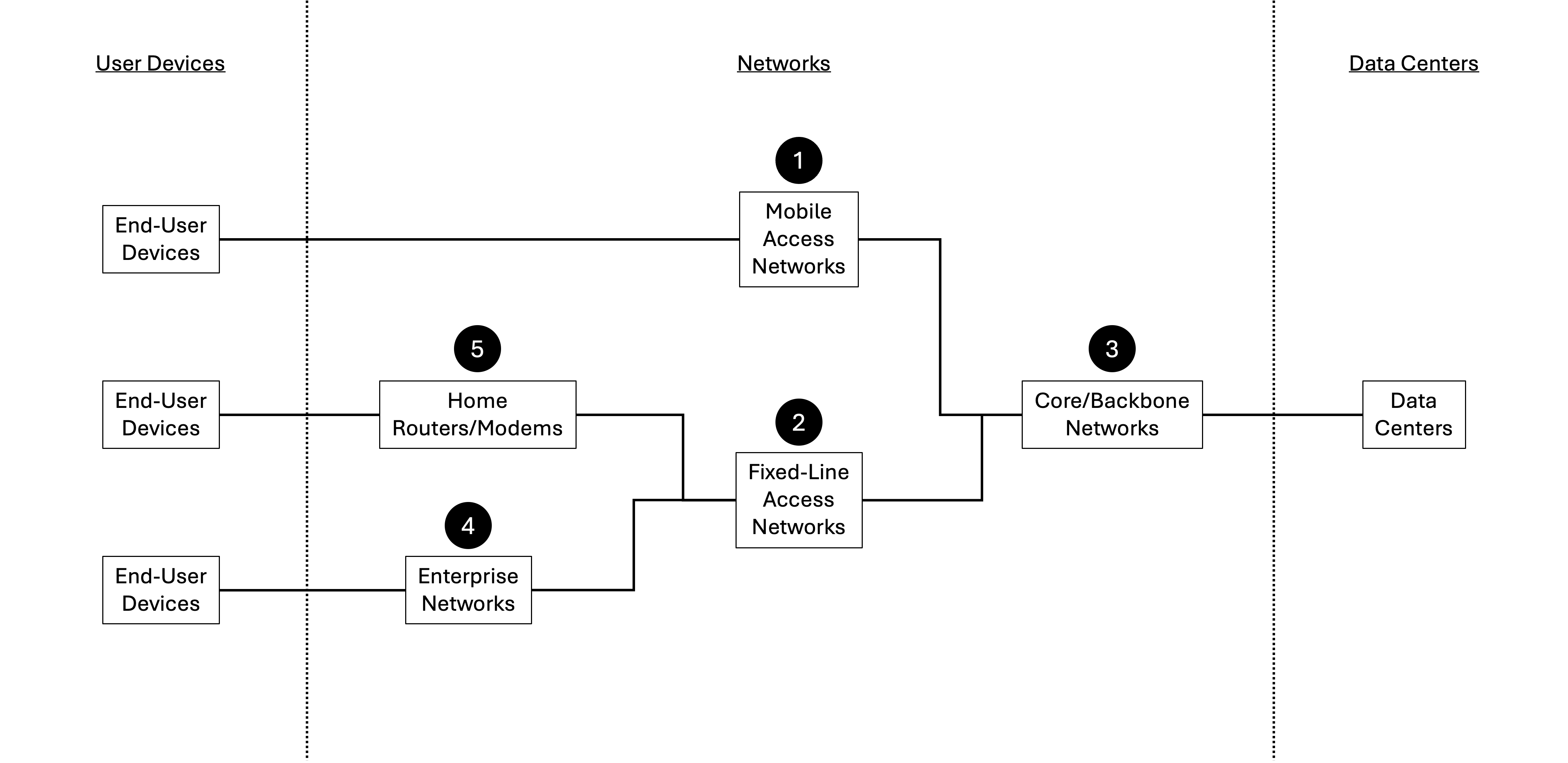

We may break down the networks category into the following segments for finer analysis:

- Mobile Access Networks: Telecom operators such as AT&T, Verizon, Vodafone, Orange, and T-Mobile operate cellular networks consisting of base stations, cell towers, antenna systems, radio transceivers, backhaul links, and associated site cooling and power equipment. These mobile networks provide wireless connectivity directly to users' mobile devices, enabling access to the broader Internet.

- Fixed-Line Access Networks: Telecom operators such as AT&T, Deutsche Telekom, Orange, and Vodafone manage equipment in central offices—including DSL Access Multiplexers (DSLAMs), Cable Modem Termination Systems (CMTS), Optical Line Terminals (OLTs), aggregation switches, and routers—and local distribution nodes such as fiber splitters, distribution frames, and copper/fiber patch panels. These "last-mile" or "access" networks aggregate and directly connect residential and business customers to the wider Internet infrastructure.

- Core/Backbone Networks: Tier 1 ISPs, backbone providers, and major telecom operators such as Level 3 (Lumen), NTT, and Cogent Communications operate core/backbone networks composed of high-capacity routers and switches, optical transport systems (e.g., Dense Wavelength Division Multiplexing (DWDM) equipment), Internet Exchange Points (IXPs), submarine cables, landing stations, and carrier-neutral data centers. These networks function as the "highways" of the Internet, providing critical connectivity and capacity for data transmission across cities, regions, countries, and continents.

- Enterprise Networks: Businesses, universities, and government institutions privately own and operate enterprise local-area networks (LANs) on their premises (corporate buildings, offices, campuses, and commercial facilities). These networks include Ethernet switches, Wi-Fi access points, routers, firewalls, network controllers, and security appliances. The primary role of enterprise networks is to provide internal connectivity, secure access to enterprise resources, and efficient Internet access for employees and enterprise devices.

- Home Routers/Modems: End-users, such as consumers and small businesses, operate home routers and modems—collectively known as Customer Premises Equipment (CPE)—on-site (homes, apartments, offices, stores). Typical equipment includes broadband modems (DSL, cable modems, fiber Optical Network Terminals (ONTs)), residential Wi-Fi routers, mesh Wi-Fi systems, and integrated modem/router home gateways. Home routers/modems represent the final ("edge") link of the Internet's infrastructure, managing local connectivity and user access to broader telecom networks.

Figure 1 illustrates how these five network segments interconnect to form the broader Internet infrastructure.

Figure 1 — The Internet’s Network Infrastructure: Integration of Mobile, Fixed, Core, Enterprise, and Home Networks.


Which segments of Internet networks consume the most power? (ZD, KR)

Reliable and up-to-date data on the energy consumption of Internet network segments is limited. Obtaining a comprehensive snapshot for analysis thus involves integrating multiple sources, extrapolating figures across different years, and working with ranges rather than precise data points.

The following methodology was applied to estimate global energy use across each segment of Internet networks for the year 2022:

- Mobile Access Networks: According to the International Energy Agency (IEA)5, global data transmission networks consumed approximately 260–360 TWh in 2022, excluding both enterprise LAN networks and home routers/modems. Mobile networks represented around two-thirds (~67%) of this total, corresponding to an estimated 174–241 TWh.

- Fixed-Line Access Networks: The remaining third (~33%) of the energy consumption (approximately 86–120 TWh) pertains to fixed networks, which include both fixed-line access networks and core/backbone networks. While the IEA does not explicitly distinguish between these two sub-segments, a comprehensive national-level telecom study by Finland's Transport and Communications Agency (Traficom, 2022)6 observed a roughly 50/50 split between fixed-line access and core/backbone networks. Assuming this ratio is generally representative, fixed-line access networks consumed approximately 43–60 TWh globally in 2022.

- Core/Backbone Networks: Following the above assumption (Traficom, 2022)6, core/backbone networks likewise consumed approximately 43–60 TWh globally in 2022.

- Enterprise Networks: Specific data on the energy consumption of enterprise Local Area Networks (LANs) seems unavailable at this time. Malmodin & Lundén (2018)7 report that global energy consumption for data centers and enterprise networks combined was about 245 TWh in 2015. Applying the IEA's 2022 data ratio (~52% networks / ~48% data centers)8 yields an estimate of around 127 TWh attributable specifically to enterprise networks. Within this enterprise networks category, it is conservatively estimated (based on typical industry heuristics) that enterprise LAN equipment represents approximately 10–15% of total consumption. This results in approximately 13–19 TWh for enterprise LAN networks in 2015. Extrapolating this estimate forward to 2022, using a conservative annual growth rate of 5–7% (below the historically observed 10–12% growth reported by Van Heddeghem et al. (2014)9, to account for improved energy efficiencies), enterprise LAN networks are estimated to have consumed 18–31 TWh globally in 2022.

- Home Routers/Modems: ABI Research10 estimates that consumer CPE consumed around 105 TWh globally in 2023, projecting a compound annual growth rate (CAGR) of 4.6% between 2023 and 2030. Assuming a similar growth rate between 2022 and 2023, the estimated global energy consumption for home routers and modems in 2022 was approximately 100 TWh.
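The arithmetic behind these estimates can be retraced with a short script. This is only a sketch of the steps described above: the helper `scale` and the variable names are illustrative, and every input is taken from the figures quoted in the list.

```python
def scale(value_range, factor):
    """Multiply both ends of a (low, high) range by the same factor."""
    low, high = value_range
    return (low * factor, high * factor)


# IEA range for global data transmission networks in 2022, in TWh (from the text).
transmission_twh = (260.0, 360.0)

mobile = scale(transmission_twh, 0.67)       # ~two-thirds mobile
fixed_total = scale(transmission_twh, 0.33)  # remaining ~third
fixed_access = scale(fixed_total, 0.5)       # assumed 50/50 split (Traficom)
core = scale(fixed_total, 0.5)

# Enterprise LANs: 245 TWh (2015) x 52% networks x 10-15% LAN share,
# then 7 years of growth at 5% (low end) or 7% (high end).
years = 2022 - 2015
enterprise = (
    245 * 0.52 * 0.10 * 1.05 ** years,
    245 * 0.52 * 0.15 * 1.07 ** years,
)

# Home CPE: remove one year of 4.6% growth from the 2023 figure.
home = 105 / 1.046

for name, (low, high) in [
    ("Mobile access", mobile),
    ("Fixed-line access", fixed_access),
    ("Core/backbone", core),
    ("Enterprise LAN", enterprise),
    ("Home routers/modems", (home, home)),
]:
    print(f"{name}: {low:.0f}-{high:.0f} TWh")
```

The results agree with the ranges above to within about 1 TWh. The small gap on the fixed-network upper bound arises because 33% of 360 TWh is 118.8 TWh, whereas the text rounds the fixed share to 120 TWh.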

Table 1 summarizes these estimated energy consumption values and the relative share of each network segment within the broader ICT network infrastructure for the year 2022:

| Network Segment | 2022 Global Energy Use (TWh/year) | % of Total Network Energy |
|---|---|---|
| Mobile Access Networks (cell sites, RAN) | ~174–241 | ~48% |
| Home Routers/Modems (CPE for consumers) | ~100 | ~23% |
| Fixed-Line Access Networks (DSL, cable, fiber nodes) | ~43–60 | ~12% |
| Core/Backbone Networks (core routers, switches, long-haul transport) | ~43–60 | ~12% |
| Enterprise Networks (enterprise LAN switches, Wi-Fi) | ~18–31 | ~6% |
| Total | ~378–492 | 100% |

Table 1 — Estimated Global Energy Consumption Breakdown for ICT Network Segments (2022).
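The percentage column appears to be derived from the midpoint of each range. That is an assumption on my part, since the method is not stated, but the sketch below reproduces the published shares under it. The dictionary keys are shortened labels, not official segment names.

```python
# (low, high) estimates in TWh for 2022, copied from Table 1.
segments = {
    "Mobile access": (174, 241),
    "Home routers/modems": (100, 100),
    "Fixed-line access": (43, 60),
    "Core/backbone": (43, 60),
    "Enterprise LAN": (18, 31),
}

total_low = sum(low for low, _ in segments.values())
total_high = sum(high for _, high in segments.values())

# Assumption: each segment's share is computed from the midpoint of its range.
midpoints = {name: (low + high) / 2 for name, (low, high) in segments.items()}
total_mid = sum(midpoints.values())
shares = {name: 100 * mid / total_mid for name, mid in midpoints.items()}

print(f"Total: {total_low}-{total_high} TWh")
for name, share in shares.items():
    print(f"{name}: {share:.1f}%")
```

Because each share is rounded independently, the rounded percentages sum to slightly more than 100%.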

Which specific pieces of network equipment (switches, core routers, edge routers, etc.) consume the most power? (ZD, KR)

In Internet infrastructure, access network equipment and core routers are typically the largest power consumers. Both ends of the network–the ubiquitous access devices and the few large core nodes–are key targets for energy reduction. For example:

- In cellular networks, about 80% of the energy is consumed by the base station sites (radio towers and associated equipment)11.

- In fixed broadband, customer-premises optical network units (ONUs) or cable modems, plus the aggregation switches and broadband network gateways, draw significant power since they are deployed in huge numbers and often run around the clock12.

- On the core side, high-capacity core routers are individually very power-hungry–a single carrier-grade router chassis can consume on the order of tens of kilowatts. For instance, a Cisco CRS-1 core router (16-slot) was rated around 13.2 kW, and newer 16-slot core routers (400 Gbps per slot) can draw up to 18 kW13. These core routers handle massive traffic volumes, so they contribute a large share of network energy especially as traffic grows. By contrast, metro/edge routers and switches (e.g. provider edge routers, data center switches) tend to consume less individually, but are still significant in aggregate.

How does energy consumption vary based on network equipment utilization (e.g., idle vs. peak load)? (ZD)

Most networking equipment today exhibits poor energy proportionality–meaning it consumes almost as much power at idle (low traffic) as it does at high utilization. Studies have shown that for routers, the power draw in idle mode is nearly the same as under heavy load14. In one example, the difference in a router’s power consumption between busy and idle state was very small, indicating that fixed overhead components (like always-on circuits, cooling fans, etc.) dominate its energy use.

This contrasts with an ideal “energy-proportional” device that would scale power consumption linearly with traffic. In practice, a large router might consume 90% of its peak power even when handling minimal traffic. The same is true for switches and base stations–they tend to have a high static power draw. The result is that energy-per-bit worsens at low loads (since watts stay nearly constant while throughput drops).

Some modern equipment is improving in this regard (through techniques like dynamic link shutdown or adaptive frequency scaling), but generally the variation is modest. Idle power remains a major factor–for example, an enterprise switch might draw only slightly less power with no ports active versus all ports active.

Because of this, strategies to put unused network components into sleep modes are important: if an idle device can be powered down or put in a low-energy state, the network’s overall consumption can drop significantly.
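To make the effect of poor energy proportionality concrete, here is a minimal sketch using a simple linear power model: a fixed idle floor plus a load-dependent part. The router in the example is hypothetical (10 kW peak, 1 Tbps capacity), and the 90% idle fraction is the illustrative figure used above, not a measurement.

```python
def power_draw_w(utilization, peak_w, idle_fraction):
    """Linear power model: a fixed idle floor plus a load-dependent part."""
    idle_w = peak_w * idle_fraction
    return idle_w + (peak_w - idle_w) * utilization


def energy_per_bit_nj(utilization, peak_w, idle_fraction, capacity_gbps):
    """Energy per bit in nanojoules at a given utilization (0 < utilization <= 1)."""
    throughput_bps = utilization * capacity_gbps * 1e9
    joules_per_bit = power_draw_w(utilization, peak_w, idle_fraction) / throughput_bps
    return joules_per_bit * 1e9


# Hypothetical router: 10 kW at peak, 1 Tbps capacity, 90% of peak power at idle.
for load in (1.0, 0.5, 0.05):
    real = energy_per_bit_nj(load, 10_000, 0.9, 1_000)
    ideal = energy_per_bit_nj(load, 10_000, 0.0, 1_000)  # energy-proportional device
    print(f"load {load:.0%}: {real:.0f} nJ/bit (ideal: {ideal:.0f} nJ/bit)")
```

Under these assumptions the energy-proportional device stays at the same energy per bit at every load, while the device with a 90% idle floor becomes roughly 18 times less efficient per bit at 5% load than at full load.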

Which network operations (routing, caching, packet forwarding, DNS resolution, encryption, etc.) consume the most energy, and where do inefficiencies like packet loss or wasted CPU cycles typically occur? (ZD, KR)

The bulk of energy in network operations is spent on the core function of moving packets–i.e., forwarding and transporting data. In a router or switch, the data plane forwarding (handling packets through forwarding engines and switch fabrics) is the most energy-intensive operation, supported by power-hungry ASICs and high-speed transceivers.

For example, a fully loaded core router expends on the order of 10 nJ per bit just to process IP packets through its forwarding engine and fabric15.

The forwarding engine, power supply, and cooling within the router contribute around 65 percent to its total power consumption.

This means that high-throughput tasks like packet forwarding and routing consume significant power. By contrast, control-plane operations (routing table computations, DNS lookups, etc.) run on general-purpose CPUs and account for only a tiny fraction of energy (the control processor is usually a small portion of the power budget).

Other operations like encryption/decryption (e.g. for VPNs or HTTPS) can add overhead if done in software, but in high-performance networks these are often offloaded to specialized hardware engines to keep up with line rate.

Network transport operations also consume energy: for wired networks, optical/electrical signal conversion and amplification use power on each link; for wireless, radio transmission is very energy-intensive (power amplifiers at base stations, for instance). Thus, long-haul transmissions and wireless access are energy-costly operations–though per bit, fiber optic transport is relatively efficient, the absolute power of optical lasers and amplifiers along a path adds up.

Caching and content distribution operations involve data storage and I/O; while storing data (e.g. in CDN servers) uses energy, retrieving data repeatedly from distant servers would consume even more via network transit, so caching is usually net beneficial energy-wise.

Key inefficiencies arise in any wasted work or idle running.

- Packet loss is one inefficiency: when packets are dropped (due to congestion or errors) and later retransmitted, the network expends energy forwarding the same data multiple times with no useful result on the first attempt. High loss or error rates (e.g., on a poor wireless link) can significantly inflate the energy per successful bit delivered because of these retries.

- Similarly, protocol overhead and unnecessary traffic (keep-alive messages, redundant transfers) consume energy carrying bytes that aren’t application payload – although per packet overhead is small, at Internet scale it adds up.

- Another inefficiency is underutilization: as noted, devices consume near-constant power regardless of load, so a router running at only 5% of its capacity still draws close to its full power, because the unused capacity doesn’t “turn off”. This leads to inefficient energy use when networks are lightly loaded. Also, powering the cooling and power supply systems for network gear incurs overhead (in a core router, power supply and cooling can be ~30% of the total power). If those systems run full tilt even for low traffic, that’s inefficiency.
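The packet-loss point can be roughly quantified. The sketch below assumes that each transmission attempt is lost independently with the same probability, that lost packets are retried until delivered, and that every attempt costs the full per-bit energy. That last assumption is pessimistic, since a packet dropped mid-path does not traverse the remaining hops. The function names are illustrative.

```python
def expected_transmissions(loss_rate):
    """Mean number of attempts per delivered packet with independent losses."""
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    return 1.0 / (1.0 - loss_rate)


def energy_per_delivered_bit(base_nj_per_bit, loss_rate):
    """Energy per successfully delivered bit, counting retransmissions."""
    return base_nj_per_bit * expected_transmissions(loss_rate)


# Hypothetical link costing 10 nJ per transmitted bit.
for loss in (0.0, 0.01, 0.2):
    print(f"loss {loss:.0%}: {energy_per_delivered_bit(10.0, loss):.2f} nJ per delivered bit")
```

In this model the inflation factor is 1/(1 - p), so a 1% loss rate adds about 1% to the energy per delivered bit, whereas a lossy wireless link at 20% adds 25%.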

How do the networking energy profiles within data centers compare to those in broader Internet infrastructure (such as ISPs or telecom networks)? (ZD)

Networking equipment typically accounts for a smaller portion of a data center's total energy consumption:

- A study by Lawrence Berkeley National Laboratory16 indicates that network equipment consumed approximately 3% of U.S. data center electricity, with servers and infrastructure being the primary energy consumers:

> Networking electricity use remains flat around 3% from 2014 to 2022, then begins to grow in 2023 with the introduction of InfiniBand switch units.

- The network inside a data center (Top-of-Rack switches, aggregation switches, etc.) is usually highly utilized and optimized for performance, with short physical distances and efficient Ethernet switches. In a Top-of-Rack (ToR) architecture17, switches are placed at the top of each server rack, connecting directly to the servers within that rack.

- These ToR switches then connect to aggregation or end-of-row switches. The physical distances between these components are relatively short, often limited to the dimensions of the server racks and the data center layout. This proximity allows for high-speed, low-latency connections using short-length cables.

- Data center switches are designed for high energy efficiency, often consuming less power per unit of data transmitted than broader network equipment. For example, a Juniper QFX5120 (32×100GbE switch) draws about 515 W when fully loaded at 3.2 Tbps of throughput, or roughly 0.16 W/Gbps. A high-end Cisco Catalyst switch circa 2007 drew about 11 W/Gbps of switching capacity at full load, so this represents roughly a 70× gain in energy-per-bit from advances in silicon, packaging, and network design.

For instance, some high-performance switches can achieve energy efficiencies as low as 0.2 Watts per Gbps.

- Moreover, data center networks operate in a controlled environment, often with modern hardware, so their power usage effectiveness is optimized. Some data centers implement heat reuse strategies to improve overall energy efficiency. For example, the Equinix PA10 data center in Paris captures waste heat from its servers and repurposes it to heat the nearby Olympic Aquatics Center18.

In contrast, for ISPs and telecom operators, the network is the product, and nearly all operational energy goes into running network infrastructure across vast areas:

- Telecom networks, including those operated by ISPs, are significant energy consumers. The telecom industry accounts for approximately 2-3% of global power consumption19. This energy is primarily used to power network infrastructure spread across vast areas, including base stations, routers, and transmission equipment.

- Telecom network equipment is often housed in outdoor cabinets20 or smaller exchanges, where traditional data center cooling methods are impractical. These setups commonly use passive cooling techniques, such as natural ventilation or fan-assisted systems, to manage heat. While effective, these methods may be less efficient than the advanced cooling systems found in centralized data centers.

- Telecom networks are typically engineered to handle peak traffic loads, leading to overprovisioning21. Demand typically peaks in the evening hours when users are active, and drops to a low in the middle of the night. For instance, regulators define peak usage as ~7–11 pm local time for fixed broadband, when heavy streaming and online activity cause the highest network utilization.22 Conversely, the late-night/early-morning hours see the lowest throughput. During off-peak hours, much of this capacity remains underutilized, resulting in energy inefficiencies. This continuous operation, regardless of actual demand, contributes to unnecessary energy consumption.

In terms of power efficiency, how do telecom grid routers compare with other networking equipment (e.g., ISP routers, data center switches)? (ZD)

Here is how networking equipment compares in terms of power efficiency:

- Telecom-grade core routers (the kind used in carrier backbones) are engineered for maximum throughput and reliability, and historically they were not very energy-efficient per bit–but this has improved in recent generations.

- In raw terms, a high-end telecom router might handle several terabits per second (Tbps) of traffic while drawing a few kilowatts of power. This can be quantified as a ratio: older core routers (circa 2010) delivered on the order of 40 Gbps per 1 kW (i.e., ~25 W/Gbps), whereas newer models improved this by an order of magnitude.

- For example, the Cisco CRS-X core router (16 slots, up to 6.4 Tbps total) has a power draw around 18 kW, which works out to roughly 2.8 W per Gbps of capacity in the chassis. This is a significant efficiency gain over earlier telecom routers (the previous CRS-1 was ~13.2 kW for 640 Gbps, ~20 W/Gbps).

- Also, telecom routers include redundant power supplies, extensive control-plane hardware, and ASICs designed for maximum throughput under all conditions, which can add to base power consumption.

- By comparison, data center switches are often even more efficient on a per-bit basis, since they are optimized for shorter distances and have fewer heavy-duty processing requirements. A modern top-of-rack switch might support 400 Gbps and consume ~200 W, which is 0.5 W/Gbps – substantially better than the core router. However, the core router is doing more complex tasks (like BGP routing, deep buffering, handling many 100 Gbps ports, etc.), which can justify higher per-bit energy.

- ISP edge routers and enterprise routers usually fall somewhere in between: they handle moderate traffic and have some efficiency measures, but when not fully utilized their watts/Gbps is worse.

- In contrast, a simple Ethernet switch (like those in LANs) can be quite efficient per bit when operating near capacity, but if only a few ports are active its relative efficiency drops.
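The W/Gbps figures quoted in this page all come from the same division of rated power by rated capacity. The sketch below recomputes them from the numbers given in the text. The dictionary layout and labels are illustrative, and the top-of-rack entry is the generic example above rather than a specific product.

```python
def watts_per_gbps(power_w, capacity_gbps):
    """Rated power divided by rated capacity; 1 W/Gbps equals 1 nJ per bit."""
    return power_w / capacity_gbps


# (rated power in W, capacity in Gbps), as quoted in the text.
devices = {
    "Cisco CRS-1 (16-slot)": (13_200, 640),
    "Cisco CRS-X (16-slot)": (18_000, 6_400),
    "Top-of-rack switch (generic example)": (200, 400),
    "Juniper QFX5120 (32x100GbE)": (515, 3_200),
}

efficiency = {name: watts_per_gbps(p, c) for name, (p, c) in devices.items()}

# Print from least to most efficient.
for name, value in sorted(efficiency.items(), key=lambda item: -item[1]):
    print(f"{name}: {value:.2f} W/Gbps")
```

These are full-load, nameplate-style ratios. As noted earlier, efficiency at typical utilization is considerably worse because idle power dominates.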

Measurement, Metrics, and Methodologies

How are sustainability metrics currently defined and measured in networking? What standards, methodologies, or frameworks exist? (KR)

The industry and research community have developed several metrics and frameworks to quantify sustainability in networks.

- A fundamental measure is simply energy consumption (power usage in Watts or energy in kWh) of network equipment.

- Building on that, energy efficiency metrics express work done per unit energy, such as throughput per Watt or its inverse, energy per bit (Joules per bit). For example, an equipment vendor might report a switch’s capacity in Gbps and its power draw to give a figure like “Joules per GB” transferred.

- There’s also interest in the carbon footprint of network operations—essentially translating energy use into CO₂ emissions based on the energy source’s carbon intensity.

Standards bodies have created specific metrics:

- ETSI (the European Telecommunications Standards Institute) defined an Equipment Energy Efficiency Ratio (EEER) for transport telecom equipment, which basically measures the ratio of throughput to power under standard conditions23.

- The ITU-T has recommendations (e.g., L.1310)24 for how to measure energy efficiency of telecom gear, specifying test methodologies (idle, full load tests, etc.) and metrics like Watts per port or per Gb/s.

- The IETF is working on green networking metrics25. In a 2024 draft, they emphasize the need for instrumentation to report metrics such as power consumption, energy efficiency (bits per Joule), and carbon footprint of a network or service.

- The idea is to have standardized counters in routers and switches that network management systems can poll, to assess energy use in real time. The IETF draft also discusses metrics for specific scopes (device-level, flow-level, and network-wide).

- For example, at the device level: instantaneous power draw (W), energy consumed over time (Joules), and utilization. At the flow level: energy per flow or per bit for a given traffic flow through the network. And network-wide: overall energy per bit delivered, or carbon emissions per bit.

- They even introduce a concept of “Sustainability Tax (ST)”–a factor to adjust raw power metrics based on how green the power source is, thus reflecting “true” carbon impact rather than just energy.

- Another widely used metric in data centers (which can apply to network facilities) is PUE (Power Usage Effectiveness) – although PUE mainly gauges facility overhead (total facility power / IT equipment power), network gear’s efficiency improvements would show up as reduced IT power for a given workload.

- For networking specifically, researchers26 sometimes use energy intensity metrics like kWh per gigabyte of data transferred, measured over large aggregates.

- This can be done top-down (total network energy divided by total traffic) or bottom-up (summing all components).

- However, such metrics vary widely depending on what’s included (access devices vs. user devices).

- Therefore, frameworks define system boundaries clearly – e.g., one might measure the energy per bit of just the network infrastructure (excluding end-user devices).

In practice:

- Operators track metrics like energy per subscriber or energy per site for mobile networks, and CO₂ per terabyte delivered for broadband.

- Sustainability reporting often follows the GHG Protocol scopes: the direct fuel usage (e.g., backup generators) as Scope 1, purchased electricity for network operations as Scope 2 (usually the biggest part for telecoms), and upstream/downstream (like manufacturing of devices or customer device usage) as Scope 3. If we want to focus on operational emissions, the key metrics come down to measuring electricity consumption of network nodes and then converting that to carbon emissions (using emission factors).

- There are also benchmarking methodologies: for example, the ATIS Telecommunications Energy Efficiency Ratio (TEER)27 in the US was proposed to rate network equipment energy per bit under standard loads.

- Frameworks like the ITU’s Sustainable ICT indicators or the GSMA’s mobile network energy efficiency benchmarking provide methodologies to compare operators. These include metrics such as energy per mobile data subscriber or per cell site, etc.

- We also see normalized metrics – e.g., energy per user-hour of internet usage – in some studies to gauge improvements over time.
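Two of the metrics above, top-down energy intensity and operational (Scope 2) emissions, reduce to simple ratios and products. The sketch below shows the calculation. All input values are hypothetical placeholders rather than data from any operator, and the function names are illustrative.

```python
def energy_intensity_kwh_per_gb(total_energy_kwh, total_traffic_gb):
    """Top-down intensity: total network energy divided by total traffic."""
    return total_energy_kwh / total_traffic_gb


def operational_emissions_kg(energy_kwh, grid_kg_co2_per_kwh):
    """Scope 2 style estimate: electricity use times a grid emission factor."""
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical operator: 1 GWh per year, 50 million GB carried, 0.4 kg CO2/kWh grid.
annual_energy_kwh = 1_000_000
annual_traffic_gb = 50_000_000
grid_factor = 0.4

intensity = energy_intensity_kwh_per_gb(annual_energy_kwh, annual_traffic_gb)
emissions_kg = operational_emissions_kg(annual_energy_kwh, grid_factor)
co2_kg_per_tb = operational_emissions_kg(intensity * 1_000, grid_factor)

print(f"{intensity:.3f} kWh/GB, {emissions_kg:,.0f} kg CO2/year, {co2_kg_per_tb:.1f} kg CO2/TB")
```

The result depends entirely on the system boundary: including or excluding CPE, enterprise LANs, or user devices changes both the numerator and the comparability of the figure.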

What specific processes and activities drive operational emissions associated with Internet networking? (KR)

The operational emissions of Internet networking are almost entirely driven by one thing: electricity consumption of the network’s hardware during use.

When we talk about operational (use-phase) emissions, we mean the CO₂ emitted by power plants to supply the electricity that runs routers, switches, optical amplifiers, cellular base stations, data center network equipment, and so on.

So the primary process is simply powering the network infrastructure. This includes: keeping the port interfaces and transceivers energized, the switching silicon forwarding packets, the control processors running, and supporting systems like cooling fans, air conditioning, and power supply units.

Breaking it down, the specific operational activities include:

- Transmitting and receiving data: Every time data traverses a network link, the equipment at both ends (and possibly amplifiers or repeaters in between) consumes energy to send or boost that signal. For fiber optic links, lasers and photodetectors consume power; for copper, line drivers; for wireless, radio transmitters and receivers (and the signal processing for modulation) consume power. So the continuous activity of carrying traffic is a direct energy driver.

- Packet processing and routing: Routers and switches perform work on each packet (looking up routes, updating headers, etc. in hardware). The faster the line rates, the more power the chips consume to handle packets in real time. High throughput engines might use TCAMs, ASICs, etc., which all draw power to push bits through. So operationally, a busy router draws more power (to a point) because its forwarding engines are actively switching packets (though, as noted, not a huge delta from idle).

- Supporting electronics: Each device has overhead functions always running – e.g., a router’s control plane (CPU, memory) managing protocols, the management interfaces, the clocking and synchronization circuits, etc. These ensure the network’s operation (like routing updates, OSPF/BGP processes, DNS resolution in a DNS server, authentication services in network access, encryption handshakes for VPNs). While individually these processes don’t consume as much as the raw forwarding, they are necessary background tasks that keep devices online and coordinating. They contribute to the baseline power usage.

- Infrastructure upkeep operations: Telcos also have ancillary operational activities like cooling (HVAC in exchange buildings or base station huts) – cooling systems can be a sizable chunk of energy especially in old facilities. For example, older telco central offices might inefficiently cool a partially filled equipment room, adding to the power draw (and thus emissions).

- Network management and monitoring: Running NMS and OSS systems, while usually in data center environments, also contributes a bit – but that’s minor compared to the network’s direct power.

- Battery charging and conversion losses: Telecom networks often use DC power systems with battery backups. Keeping batteries trickle-charged and inverters/rectifiers running is another operational load (and if generators are tested or run, that can be a direct fuel emission, though that’s intermittent).

Does the geographic or network-path location of packet transmission significantly impact network energy consumption, and if so, how? (KR)

Yes, the geographic path a packet takes can significantly impact the energy consumed to deliver it:

- The longer the distance and the more network hops data must traverse, the more equipment is involved and the higher the total energy required.

- Physical distance matters because, for example, sending data across continents might involve dozens of router hops and many thousands of kilometers of fiber.

- Along that journey, every optical line has periodic amplifiers/regenerators to maintain signal strength, each hop goes through a router or switch, and often multiple networks (autonomous systems) are involved. Each of those elements draws power.

- In contrast, delivering the same data from a nearby server over a short path uses far fewer resources.

- The network path matters in terms of intermediate technologies: sending data through a path with many optical-electrical-optical conversions (OEO)–e.g., traversing many legacy network nodes–will use more energy than a mostly optical express route.

- If a path goes through a power-hungry older core router vs. a newer efficient one, that also changes the footprint.

- Peering and routing policies on the Internet might send packets on suboptimal routes distance-wise. For instance, some traffic between two nearby countries might actually hairpin through a distant third country due to how networks interconnect, adding unnecessary path length.

- Those extra kilometers and router hops directly translate to extra joules burned.

- Another aspect is geographic location differences in energy source:

- While the packet’s energy need is determined by the equipment, the emissions from that energy depend on location too.

- A packet traveling through a node powered by renewable energy versus one powered by coal-heavy grid will have different associated emissions, even if energy consumed is the same.

- Some have proposed routing packets through regions with cleaner power (“green routing”)28 if possible. That’s a more advanced consideration, but it highlights that geography can affect the carbon impact, not just the energy.
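One way to reason about path effects is to attribute an average energy per bit to each hop and weight it by the carbon intensity of the grid powering that hop. The sketch below does this for two hypothetical paths. The `Hop` class and all per-hop figures are made up for illustration, and this is an average-attribution model, not a marginal one: given the idle-power behavior discussed earlier, sending one more packet adds far less energy than these figures suggest.

```python
from dataclasses import dataclass

JOULES_PER_KWH = 3.6e6


@dataclass
class Hop:
    name: str
    nj_per_bit: float       # hypothetical average energy attributed per bit
    grid_g_per_kwh: float   # hypothetical grid carbon intensity at this hop


def path_energy_joules(hops, bits):
    """Total energy attributed to carrying `bits` across every hop."""
    return sum(hop.nj_per_bit for hop in hops) * 1e-9 * bits


def path_emissions_grams(hops, bits):
    """Emissions in grams of CO2, weighting each hop by its local grid intensity."""
    total = 0.0
    for hop in hops:
        kwh = hop.nj_per_bit * 1e-9 * bits / JOULES_PER_KWH
        total += kwh * hop.grid_g_per_kwh
    return total


ONE_GB_BITS = 8e9
short_path = [Hop(f"metro-{i}", 10.0, 400.0) for i in range(3)]
long_path = [Hop(f"transit-{i}", 10.0, 400.0) for i in range(12)]

for label, path in (("short", short_path), ("long", long_path)):
    print(label, path_energy_joules(path, ONE_GB_BITS), "J",
          path_emissions_grams(path, ONE_GB_BITS), "g CO2")
```

With identical hops, energy scales linearly with hop count. Changing only `grid_g_per_kwh` leaves the energy unchanged but shifts the emissions, which is the premise behind green routing.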

Best Practices, Lessons Learned, and Optimization Opportunities

What strategies or practices do network operators (ISPs, telecoms, data centers) currently use to lower emissions and energy-related costs? (KR)

Network operators (ISPs, telecom companies, data center network managers) have been adopting a variety of strategies to reduce energy consumption and associated costs/emissions, including:

- Upgrading to more energy-efficient equipment:

- As newer generations of routers, switches, and base stations are often designed to handle more throughput per watt, operators regularly refresh hardware to improve efficiency. This has historically yielded around 10% improvement per year in network energy efficiency just by technology advances29.

- For example, swapping out old DSLAMs for modern fiber OLTs, or replacing an older core router with a newer model that uses less power for the same traffic, can significantly cut power use. Operators often have sustainability- or cost-driven refresh cycles where the power savings justify the CapEx.

- Decommissioning outdated equipment (removing unused legacy network gear that sits in racks still powered) has also yielded surprising savings in large networks. Network audits often find old switches or redundant links that can be turned off.

- Power management features:

- Many operators now use equipment that supports low-power idle states. Telecom operators encourage these features because millions of idle modems at full power translate to huge waste.

- A concrete case is the EU Code of Conduct on Energy Consumption of Broadband Equipment, which led vendors to introduce sleep modes for customer premises equipment and DSL line cards. This means, for instance, when a user is not actively using their home router or modem, the network can drop link rates or put parts of the circuit to sleep, reducing power draw.

- In mobile networks, carriers are starting to use features like turning off some carriers or sectors during low traffic hours (e.g., at night some base stations can go into a low-power state or disable unneeded transmit antennas).

- These dynamic energy-saving features are complex to implement (must avoid impacting connectivity), but they are increasingly part of network operations practices.

- Network optimization and traffic engineering:

- For backbone networks, operators can use techniques to consolidate traffic onto fewer links during off-peak times, so that other links or even entire routers can be shut down or put in standby.

- For example, if overnight traffic drops to 30%, one could reroute everything through a subset of nodes and turn off line cards on lightly used routes.

- Some operators have started to implement this in limited fashion – e.g., turning off one of parallel redundant links when not needed. SDN (Software-Defined Networking) controllers make it easier to reroute flows with an eye on energy, a practice some large providers are exploring in their core networks to throttle energy use during low demand.

- Infrastructure efficiency improvements:

- Telecom operators are investing in better cooling (even liquid cooling for baseband units in cellular towers) and power systems (high-efficiency rectifiers, DC power plants) to reduce overhead energy loss.

- If cooling can be made more efficient, the total energy per bit delivered goes down. Some telcos have reported trials of free cooling for central offices (using outside air) or consolidating equipment from many small sites into a more efficient large site.

- These practices mirror data center approaches (improving PUE), applied to distributed network sites.

- Renewable energy integration:

- While it doesn’t reduce energy consumption, it lowers emissions. Many operators install solar panels or wind at remote base station sites to offset grid use, and purchase renewable energy for their facilities.

- For example, powering a cell tower partly by solar reduces diesel generator use or grid draw, thereby cutting operational carbon (telcos in some emerging markets did this primarily to reduce diesel fuel costs and secondarily for emissions).

- Monitoring and optimization programs:

- BT30, for instance, has offered energy and carbon footprint tracking tools for enterprise customers’ networking devices, which helps identify inefficiencies.

- On the provider side, continuous energy monitoring of network elements is becoming part of the NOC (Network Operations Center) dashboard.

- By having visibility into which devices consume how much power, operators can pinpoint hotspots of inefficiency and target them.

- Virtualization and network function virtualization (NFV):

- By virtualizing network functions (routers, firewalls, etc.) onto general-purpose servers, operators can potentially consolidate multiple network appliances into one physical server, which when highly utilized can be more energy-efficient than many under-utilized dedicated devices.

- This is done in telco clouds for things like IMS, EPC (core networks), etc. The fewer physical boxes doing the same work, the less total power (plus easier to manage cooling in one place).

- Demand-side management:

- Some ISPs encourage users to download large updates or perform cloud backups overnight when the network is underutilized, leveling the load.

- This doesn’t necessarily save energy, but it can avoid capacity over-provisioning and enable smoother adaptation of energy-saving modes.
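
The traffic-consolidation idea from the list above (keeping only as many parallel links powered as off-peak load requires) can be sketched as a simple sizing rule. This is a minimal illustration under stated assumptions: the function name, the 70% utilization ceiling, and the two-link redundancy floor are hypothetical choices, not operator practice reported in the sources.

```python
import math


def links_to_keep_active(offered_gbps, link_capacity_gbps, total_links,
                         max_utilization=0.7, min_active=2):
    """Return how many identical parallel links should stay powered.

    max_utilization: assumed headroom so remaining links are not saturated.
    min_active: assumed redundancy floor so one failure does not cut service.
    """
    if offered_gbps < 0 or link_capacity_gbps <= 0 or total_links < 1:
        raise ValueError("invalid traffic or link parameters")
    usable_per_link = link_capacity_gbps * max_utilization
    needed = math.ceil(offered_gbps / usable_per_link)
    # Never go below the redundancy floor or above what is installed.
    return min(total_links, max(min_active, needed))


# Illustrative bundle of four 100 Gbps links with a 240 Gbps daytime peak.
peak_links = links_to_keep_active(240, 100, total_links=4)
# Overnight traffic at 30% of peak (72 Gbps).
night_links = links_to_keep_active(0.30 * 240, 100, total_links=4)
```

With these assumed numbers, all four links are needed at peak but only two overnight, so the line cards behind the other two could be candidates for standby.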

How energy-efficient are Content Delivery Networks (CDNs), and how do their caching and data distribution practices affect overall efficiency? (KR)¶

We have seen that the longer the distance and the more network hops data must traverse, the more equipment is involved and the higher the total energy required. This is one reason Content Delivery Networks (CDNs) exist–by serving content from geographically closer nodes, we not only reduce latency but also save energy.

A CDN cache near the user means that the packet travels through, say, 3 routers instead of 15 to reach the origin, dramatically cutting the energy per bit delivered:

- Studies of Internet energy intensity have noted a huge range of energy per GB figures partly because of path differences–for example, a local exchange of data might consume on the order of 0.01 kWh/GB, whereas a transoceanic transfer could be 10× or more (especially if the path is not optimized)31.

- One case study (Schien et al. 2015)32 of a long-distance video call found around 0.2 kWh per GB consumed for the network transmission, factoring in all intermediate network devices. By contrast, delivering that data within the same city would involve far less consumption.

CDNs can also enable off-peak traffic shifting: for example, a CDN might pre-fetch or push content to edge servers during low-traffic hours (when the energy grid is perhaps under less stress or running on more renewables). Users then fetch from the edge during peak hours. This can smooth network load and avoid firing up additional network devices at peak time, an indirect efficiency gain. This is essentially what Netflix does with their own middleboxes, as discussed in CS249I.

There are two caveats to consider, though:

- If content is very long-tail (infrequently accessed), a CDN might cache it only for it to expire before it is ever re-used, which wastes effort and energy. CDN operators mitigate this with cache algorithms that evict truly unpopular content and by partitioning what they cache at edge vs. regional vs. central.

- CDNs add some duplicated infrastructure (many servers around the world), which has an embodied carbon cost and operational overhead. But from an operational emissions perspective, because they sit in well-managed data centers (often with good PUE and sometimes renewable energy), they tend to operate efficiently.

Overall, CDNs are quite energy-efficient in delivering data, and the net effect is usually positive. One study33 suggested a CDN can save on the order of 20-50% of energy per bit delivered compared to non-cached delivery, especially for content with high demand:

"Finally, comparing network without CDN nor caches, to network with 50% of traffic served by CDN and with enabled caches, we save 23.12% of energy."

The key is to ensure the CDN is well-architected (so that it isn’t keeping too much cold data at the edge) and that the hit rate is high on popular items. When those conditions are met, CDNs not only improve performance but also make the Internet greener by eliminating millions of unnecessary long-distance data trips.
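
As a rough illustration of why hit rate matters, the sketch below models expected network energy per GB as a blend of short (edge hit) and long (origin miss) paths, plus an optional per-GB overhead for running the cache itself. The model and all parameter values are assumptions for illustration; it is not the methodology of the study quoted above, and it ignores embodied carbon.

```python
def cdn_energy_per_gb(hit_ratio, hops_edge, hops_origin, j_per_gb_per_hop,
                      cache_overhead_j_per_gb=0.0):
    """Expected energy (J/GB) when a fraction `hit_ratio` is served at the edge."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    edge = hops_edge * j_per_gb_per_hop
    origin = hops_origin * j_per_gb_per_hop
    # Hits take the short path, misses take the long one; the cache
    # overhead is paid either way because the edge servers stay powered.
    return hit_ratio * edge + (1.0 - hit_ratio) * origin + cache_overhead_j_per_gb


def cdn_savings_fraction(hit_ratio, hops_edge, hops_origin, j_per_gb_per_hop,
                         cache_overhead_j_per_gb=0.0):
    """Fractional saving versus serving everything from the origin."""
    baseline = hops_origin * j_per_gb_per_hop
    with_cdn = cdn_energy_per_gb(hit_ratio, hops_edge, hops_origin,
                                 j_per_gb_per_hop, cache_overhead_j_per_gb)
    return 1.0 - with_cdn / baseline


# Popular content: half the traffic hits a cache 3 hops away instead of 15.
popular = cdn_savings_fraction(0.5, 3, 15, j_per_gb_per_hop=100)
# Cold content: nothing hits, but the cache overhead is still paid.
cold = cdn_savings_fraction(0.0, 3, 15, j_per_gb_per_hop=100,
                            cache_overhead_j_per_gb=150)
```

Under these assumed values the popular case saves energy while the cold case comes out negative, which mirrors the long-tail caveat: a cache that never gets hits only adds overhead.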

The overall efficiency of CDNs is evident in practice: without CDNs, the explosive growth of streaming and video traffic would have required a proportional growth in core network energy use. CDNs have helped absorb that growth in a more energy-efficient way.

What insights or best practices regarding energy efficiency can be derived from existing research such as Sarah McAllister’s "Project Kangaroo" at CMU, particularly related to data centers? (KR)¶

Sarah McAllister’s work (at CMU) on sustainable data centers–notably Project Kangaroo34–provides valuable insights, especially around the efficiency of caching and storage systems in data centers. Project Kangaroo is essentially a redesigned caching system for tiny objects that optimizes how data is stored across memory (DRAM) and flash storage.

The key insight from Kangaroo is that intelligently balancing different storage media can dramatically improve energy efficiency and hardware longevity in caches.

The subsequent system, FairyWREN35, explicitly tackled sustainability by targeting flash lifespan and embodied carbon. It introduced a new interface (WREN – Write-Read-Erase Interfaces) and caching strategies to reduce write amplification on flash. The result was a 12.5× reduction in writes to the flash devices compared to state-of-the-art caches.

This is significant because it extends the lifetime of SSDs in the data center. Longer-lived SSDs mean fewer replacements, which directly reduces embodied emissions (manufacturing of flash is carbon-intensive). In their results, this translated to about a 33% reduction in flash-related carbon emissions for the cache system, simply by using it more intelligently.

While this is about embodied carbon primarily, it’s achieved through a software/algorithm improvement – a great example of how software can improve sustainability by making hardware last longer and operate more efficiently.

For our investigation:

- This underscores the importance of examining algorithms (like caching, scheduling, load balancing) for opportunities to eliminate waste.

- It also demonstrates that sustainability should be a cross-layer concern: McAllister’s work looked at application-level caching, OS/hardware interfaces, and device physics together.

- Similarly, we can’t treat network sustainability as just a hardware problem–it requires involvement from software (protocols, algorithms) to fully realize optimizations.

Which areas or layers within the overall network infrastructure present the greatest opportunities for sustainability optimization? (ZD)¶

Looking at the entire network stack–from physical infrastructure up to applications–certain areas stand out as particularly ripe for sustainability improvements:

- Access Networks (Last Mile and Customer Equipment):

- This is one of the biggest opportunities. The access network includes things like home routers/modems, fiber ONUs, cable nodes, DSLAMs, and cellular base stations. These are extremely numerous and often on 24/7. Improvements here have a large aggregate effect.

- For example, home gateways have historically consumed 5-10 W each, so making them more efficient or adding sleep modes can save megawatts across a country. Even a few watts saved on millions of devices is significant.

- Likewise, in mobile, the Radio Access Network (RAN) is the dominant power sink. Optimizing this layer (through better hardware, or turning off underused cells, or more efficient antennas) offers tremendous savings.

- Core Network Routers and Switches:

- At the other end, the core IP network (including core routers, high-speed switches, optical transport equipment) is a critical area. As access speeds and traffic volumes increase, studies indicate the core routers will dominate power consumption at high data rates if not improved. These large routers are complex, but improving their energy per bit (through better chip design, cooling, or more adaptive operation) can yield big absolute savings because each device consumes so much power.

- There’s also an opportunity to implement energy-aware routing/scheduling in this layer: since core networks typically have redundant paths, selectively powering down some links during low demand can save energy. The core has far fewer devices than the access network, but each optimization (like a 10% efficiency gain) can save kilowatts per device. Moreover, as core capacity grows, ensuring energy scales sub-linearly (so we don’t need double the power for double the traffic) is key to sustainable growth.

- Transmission/Physical Layer:

- The physical layer is often where a lot of static power is drawn (keeping lasers biased, keeping clock circuits running), so advancements that enable turning those off when not needed (even microsecond-scale on/off) are impactful.

- Improvements in optical transport and link technologies offer opportunities. Long-haul fiber links are already quite efficient (WDM optical systems can carry huge capacity with relatively low power), but as we push towards petabit scales, even small improvements in transceiver efficiency multiply out.

- The introduction of techniques like adaptive link rate (ALR) on Ethernet, or more aggressive use of low-power states in optical transponders, can reduce idle consumption. The copper Ethernet PHY layer has an IEEE Energy Efficient Ethernet standard (802.3az), which allows links to drop to a low-power idle state when there is no traffic; implementing it widely in LAN switches could save a lot at the enterprise LAN layer.

- Network Architecture and Protocol Layer:

- Deploying more caching (CDNs) at the edge reduces load on core links significantly, as discussed, thereby reducing energy in the core. So the content distribution layer is an opportunity: expanding CDNs, optimizing where content is served from (maybe serving from the most energy-efficient data center available) can cut overall energy.

- Current protocols don’t factor energy into routing decisions, but there’s an opportunity to incorporate energy metrics. An area of research is to route traffic not just based on shortest path, but also based on which path is more energy-efficient (perhaps a slightly longer path that goes through a highly efficient router could be better than a short path through an old router – this is a trade-off scenario).

- Virtualization/network slicing can allow multiple networks to share the same physical hardware, raising utilization and allowing other hardware to be switched off–targeting the network virtualization layer is another opportunity.

Given that operational efficiency typically increases with scale (e.g., hyperscale data centers), yet reducing data transmission distance can significantly decrease network emissions (as seen in edge computing and local POP caching), what opportunities exist for optimizing the geographic distribution of network infrastructure to balance these trade-offs and minimize total emissions? (ZD, TC)¶

Balancing the trade-off between centralized hyperscale data centers and decentralized edge deployments is critical for minimizing total emissions in network infrastructure:

- Hyperscale facilities excel in energy efficiency (PUE of ~1.1–1.3) due to optimized cooling and high server utilization (40–60%), achieving low energy usage (~20 µJ/bit total including overhead).

- In contrast, edge nodes typically operate at higher PUE (~1.5–2.0), lower utilization (10–30%), and thus can be less efficient per unit of compute delivered.

- However, edge deployments substantially reduce energy associated with network transmission—particularly relevant when data travels significant distances.

- For example, serving data locally with fewer router hops (2–3 hops) can significantly lower network energy compared to distant data centers (11+ hops).

The environmental tipping point—where edge becomes more sustainable than hyperscale—depends primarily on geographic distance, workload intensity, and technology choice:

- Specifically, edge computing is environmentally beneficial when handling substantial local traffic, thereby maximizing utilization and minimizing idle time.

- Conversely, low-utilization edge sites can become inefficient, exceeding cloud emissions if active less than 10% of the time. Technology also matters: edge nodes on fiber connections are highly energy-efficient, whereas wireless edge deployments (e.g., 4G/LTE) can negate these gains due to higher access network energy use.
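
The tipping point described above can be explored with a toy model that adds facility energy (PUE-scaled server power divided by useful throughput) to network energy (hops times a per-hop cost). Every default value below is a hypothetical placeholder chosen only to make the trade-off visible; none comes from the cited studies.

```python
def energy_per_gb(pue, utilization, hops,
                  server_idle_w=100.0, server_peak_w=200.0,
                  gb_per_s_at_peak=1.0, j_per_gb_per_hop=50.0):
    """Toy estimate of total energy (J) to serve 1 GB from a given site."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    # Server power rises linearly from idle to peak with utilization.
    power_w = server_idle_w + (server_peak_w - server_idle_w) * utilization
    served_gb_per_s = gb_per_s_at_peak * utilization
    # Idle power is amortized over fewer gigabytes when utilization is low.
    facility_j = pue * power_w / served_gb_per_s
    network_j = hops * j_per_gb_per_hop
    return facility_j + network_j


# Assumed scenarios loosely following the ranges quoted above.
hyperscale = energy_per_gb(pue=1.2, utilization=0.5, hops=11)
edge_busy = energy_per_gb(pue=1.8, utilization=0.5, hops=3)
edge_idle = energy_per_gb(pue=1.8, utilization=0.2, hops=3)
```

With these placeholder numbers the well-utilized edge site beats the distant hyperscale facility, while the lightly used edge site is the worst of the three, which is the qualitative pattern the bullets describe.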

Grid carbon intensity and climate considerations further influence optimal infrastructure placement:

- Hyperscale facilities situated in regions with abundant renewable energy (such as the Pacific Northwest or Northern Europe) significantly reduce overall emissions. For instance, Netflix leveraged carbon-neutral AWS regions to substantially reduce its cloud emissions.

- In hotter climates, small edge facilities with less advanced cooling can incur higher overhead. Carbon-aware scheduling (dynamically routing traffic based on regional grid carbon intensity) has demonstrated substantial emissions reductions (49–68%) without significantly impacting latency.

Strategically, hybrid architectures leveraging hyperscale efficiency for general compute and edge deployments for latency-sensitive or high-volume local data are optimal:

- Techniques like workload consolidation, CDN caching, and modular edge infrastructure that scales dynamically with demand ensure maximum utilization and minimize idle inefficiency.

- Integrating advanced cooling at the edge and carbon-aware load management are essential for sustainably balancing efficiency and proximity, ensuring infrastructure is both environmentally and operationally optimal.

If the energy consumption of Internet networking were significantly optimized, what measurable environmental impacts could we expect? (ZD)¶

As we have seen, mobile and fixed networks alone were estimated to account for 1-1.5% of global electricity use in 2022. Therefore, improvements in network efficiency can make a dent in global energy use. Here are some impacts we could expect:

- Reduction in CO₂ Emissions: Electricity savings directly translate to lower CO₂ emissions, unless that electricity is already 100% renewable. For a ballpark figure, suppose global Internet network infrastructure uses on the order of a couple hundred terawatt-hours (TWh) per year (a rough estimate for telecom networks worldwide). If optimizations cut this by, say, 50%, that’s on the order of 100 TWh saved annually. Using an average grid emission factor (~0.5 kg CO₂ per kWh, though it varies), this would avoid about 50 million metric tons of CO₂ per year. That’s equivalent to the annual emissions of several million cars being removed from the road or a dozen mid-sized coal power plants being shut down. In terms of percentage, ICT’s overall carbon footprint is often cited around 2-3% of global emissions; a major networking efficiency gain could shave a fraction of a percent off global emissions – which is a big deal in climate terms, where every tenth of a percent is hard fought. Moreover, as other sectors decarbonize, ICT’s share would grow, so making networks efficient keeps ICT’s footprint in check.

- Electricity Demand and Grid Load: From an energy infrastructure perspective, reducing network power use eases demand on the grid. For instance, telecom operators in some countries are among the larger electricity consumers; cutting their consumption frees up capacity and can reduce the need for additional power generation (especially at peak times). This in turn can reduce the use of peaker plants (often fossil-fueled) and improve overall grid efficiency. If networks are optimized to be more energy-proportional, their demand during off-peak times will drop, potentially smoothing grid load profiles.

- Less Waste Heat: Every watt consumed by networking gear becomes heat that needs to be dissipated. So cutting network energy use also reduces waste heat output. In urban environments, telecom equipment in exchanges adds to building heat; reducing that could marginally lower urban cooling loads in aggregate. It’s a secondary effect but still positive (especially if an exchange or data center is near capacity for cooling, this could alleviate that).

- Extended Lifespan of Equipment and Less e-Waste: Interestingly, some optimizations (like reducing device utilization or using sleep modes) can prolong the life of equipment. For example, cooler, less stressed devices fail less often. If core routers can run cooler thanks to lower utilization or intelligent cooling, they might have a longer useful life, meaning replacement cycles can be extended. That in turn means less e-waste and less frequent manufacturing of new equipment (which is a source of embodied emissions). Similarly, if batteries at base stations aren’t cycled as hard because the load is lower, they last longer (reducing battery waste). So in a roundabout way, operational efficiency can yield material and lifecycle environmental benefits.

- Contribution to Industry Climate Goals: Many telecom and tech companies have climate pledges (e.g., carbon neutrality by 2030 for operations). Achieving those often hinges on reducing energy use and then supplying the remainder with renewables. Significant network optimization makes those goals more attainable by shrinking the energy footprint that needs to be offset or cleaned. So, we’d see more companies in the ICT sector hitting their targets, which contributes to overall climate commitments globally (like the Paris Agreement targets).

- Quantifiable benchmark improvements: We would expect to see improvements in metrics like energy per bit for the Internet. As a purely illustrative figure, suppose delivering 1 GB over the Internet consumes 0.1 kWh of energy on average today. If optimization cuts that to 0.01 kWh/GB, then the carbon per GB (if on fossil power) drops by the same factor. This means the future growth of Internet traffic could occur with minimal increase in energy – a kind of “decoupling” of data growth from energy growth. Environmentally, that’s crucial: we could handle, say, 10× more traffic in the future with no more (or even less) total energy than we use now, if efficiency gains keep pace with or outpace traffic growth. That yields a scenario where the Internet’s environmental impact stabilizes or even decreases despite growth, which is a very positive outcome.
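
The ballpark arithmetic in the list above can be checked with a few lines of code. The inputs are the same rough, illustrative figures used in the text (200 TWh baseline, 50% reduction, 0.5 kg CO₂ per kWh, 0.1 to 0.01 kWh/GB); the function names are just labels for this sketch.

```python
KWH_PER_TWH = 1e9          # 1 TWh = 1e9 kWh
KG_PER_MEGATONNE = 1e9     # 1 Mt = 1e9 kg


def avoided_co2_megatonnes(baseline_twh, reduction_fraction, kg_co2_per_kwh):
    """Annual CO2 avoided (Mt) for a given cut in network electricity use."""
    saved_kwh = baseline_twh * KWH_PER_TWH * reduction_fraction
    return saved_kwh * kg_co2_per_kwh / KG_PER_MEGATONNE


def relative_total_energy(traffic_multiplier, old_kwh_per_gb, new_kwh_per_gb):
    """Future total energy as a multiple of today's, given traffic growth."""
    return traffic_multiplier * new_kwh_per_gb / old_kwh_per_gb


# Rough figures from the text: ~200 TWh/year, cut by 50%, at ~0.5 kg CO2/kWh.
avoided = avoided_co2_megatonnes(200, 0.5, 0.5)
# 10x traffic with intensity falling from 0.1 to 0.01 kWh/GB.
energy_ratio = relative_total_energy(10, 0.1, 0.01)
```

This reproduces the roughly 50 million tonnes figure, and shows that a tenfold intensity improvement exactly offsets tenfold traffic growth; total energy only falls if efficiency improves faster than traffic grows.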

Complementary Perspectives and Contextual Considerations¶

What can we learn from sustainability optimization in closed enterprise networks (LANs, private networks) that could be applied to broader Internet networks? (TC)¶

Closed enterprise networks – like corporate LANs or campus networks – often serve as microcosms where innovative energy-saving techniques can be tried, and many of those lessons can extend to larger public networks:

- For example, in an office LAN, IT staff might power down certain network elements (like floors of switches or Wi-Fi access points) after business hours when nobody is around:

- Enterprises have the advantage of knowing usage patterns (e.g., 9am-5pm offices) and can schedule equipment to enter low-power states accordingly.

- This idea directly inspires broader Internet strategies: if parts of a telco or ISP network consistently see low traffic at night, why not put those on “sleep” much like an enterprise does with office APs?

- The challenge is greater (Internet traffic can’t be totally quiet at night, and there are always some users), but the principle stands: idle capacity = opportunity to save power, if you can redistribute load.

- Enterprises have shown that even turning off half the access points at night in a building (while maintaining coverage) saves energy with negligible impact. ISPs could similarly turn off selective small cells or use fewer frequencies at off-peak times.

- Another lesson from enterprise networks is the use of Energy Efficient Ethernet (EEE) on LAN links and adaptive link rates:

- Many modern office switches and NICs support 802.3az, entering low-power idle when traffic is sparse (and some can also drop link speed). In a single LAN, the savings might be a few watts per link, but across thousands of links that’s meaningful.

- This concept can apply Internet-wide. For example, inter-router links in backbone networks could use adaptive rates: if a link’s utilization is 5%, perhaps it could slow from 100 Gbps to 10 Gbps during that period to save power (if hardware supports it). The coordination required is higher, but the enterprise case proves viability in principle.

- Network monitoring and granular control in enterprises also provides a model:

- Enterprises often have detailed power monitoring of their on-prem equipment (some smart power strips can turn off devices on schedule, etc.). Extending that, ISPs now deploy smart meters on their base station sites and use software to remotely manage power.

- An enterprise might automatically shut down a lab’s network equipment when not in use; similarly an ISP could remotely shut down, say, a spare router in a point-of-presence that currently isn’t carrying traffic. The control frameworks are analogous, just at larger scale.

- We’ve also seen enterprises consolidate and virtualize network functions (e.g., running multiple services on one appliance):

- That’s a precursor to telco NFV where the same idea is used network-wide.

- If an enterprise can run a firewall, a router, and a DHCP server on one physical box instead of three, an ISP can run multiple virtual routers on one chassis in the core instead of many half-utilized boxes. So the consolidation lesson is directly applicable.

- Another enterprise innovation is Power over Ethernet (PoE) management–e.g., using PoE controllers to cut power to devices like IP phones after hours. In broader networks, we don’t exactly cut power to users, but the idea of centralized power control is analogous to using SDN or centralized controllers to manage device states across the network.

- Enterprise networks also often leverage policy-based routing to direct traffic in certain ways for performance or cost; one can envision policy-based routing for energy–e.g., an enterprise might route internal traffic through a specific low-power firewall at night. Similarly, an ISP could route traffic via a path that goes through “green” (low-energy or renewable-powered) nodes when possible.
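
The adaptive link rate idea from the list above (slowing a lightly loaded 100 Gbps link to 10 Gbps) amounts to picking the lowest supported rate that still leaves headroom. The sketch below is a minimal illustration; the rate set and the 60% utilization ceiling are assumptions, and real hardware may not support renegotiating rates on the fly.

```python
def pick_link_rate(load_gbps, supported_rates_gbps, max_utilization=0.6):
    """Return the lowest supported rate that carries the load with headroom.

    max_utilization is an assumed safety margin so bursts are not dropped.
    Falls back to the highest rate when no lower rate is sufficient.
    """
    if load_gbps < 0 or not supported_rates_gbps:
        raise ValueError("load must be non-negative and rates non-empty")
    for rate in sorted(supported_rates_gbps):
        if load_gbps <= rate * max_utilization:
            return rate
    return max(supported_rates_gbps)


# Hypothetical rate set for a backbone interface.
RATES = [10, 25, 40, 100]
quiet_rate = pick_link_rate(5, RATES)    # 5% of a 100 Gbps link
busy_rate = pick_link_rate(30, RATES)    # daytime load
```

Here the quiet period maps to the 10 Gbps rate and the busier one back to 100 Gbps. A production controller would also need hysteresis so the link does not flap between rates around a threshold.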

Are there effective sustainability strategies applied to user devices (multi-core architecture) and data centers (e.g., demand shaping and shifting) that could be adapted to optimize networks? (TC)¶

From user devices:

- Multi-core architecture and power management:

- Modern CPUs and smartphones use heterogeneous multi-core designs–e.g., a set of high-power cores and a set of low-power cores (big.LITTLE architecture). The system schedules heavy tasks on the big cores and background/light tasks on little cores to save energy.

- The principle here is energy proportional computing: use just enough hardware to meet current demand.

- In networking, we can imagine a similar approach: for instance, a router could have multiple forwarding engines or processing pipelines, some optimized for high throughput and others for low-power operation. When traffic is light, it could route packets through a low-power pipeline (analogous to a little core) and shut down the heavy-duty ASICs, and when traffic ramps up, bring the big ones online.

- Some routers today have modular line cards–potentially one could disable entire line cards when ports aren’t needed, similar to parking cores in a CPU. Also, just as CPUs dynamically change frequency (DVFS – dynamic voltage and frequency scaling) based on load, network devices could adjust clock speeds of their switching ASICs under lower load to save power (some high-end switches do have lower clock modes).

- So, dynamic scaling at the hardware level in network gear is directly analogous to CPU power scaling.

- Sleep states:

- User devices also aggressively use sleep states – e.g., a phone’s radio sleeps between data bursts (Discontinuous Reception in LTE/5G) to save battery.

- Networks can emulate this by having micro-sleeps on links (Energy Efficient Ethernet does something like this by turning off the transmitter for microseconds when idle). Even routing protocols could be designed to allow short naps of interface when no data is flowing.

From data centers:

- Demand response:

- Data centers sometimes delay certain batch jobs or shift them to different times (or locations) to coincide with lower power prices or higher renewable energy availability. This is often called workload scheduling for efficiency.

- A similar idea in networking is traffic shaping for energy: if certain traffic isn’t urgent, the network could schedule it to times when the network is underutilized (and maybe when electricity is cleaner). For example, large software updates or backups could be delayed to nighttime when the network can carry them with minimal impact and when otherwise devices might be idle (so we avoid keeping them busy in peak hours).

- This flattens the network demand curve, allowing network devices to operate more steadily and possibly enabling longer idle periods (during which some components can turn off). One could imagine ISPs offering “energy-efficient delivery” options for content providers: non-time-sensitive data gets delivered when it’s greenest to do so.

- Geographical load shifting:

- Another practice in data centers is to move compute tasks to data center sites where energy is currently plentiful/cheap (e.g., from an area in nighttime to one in daytime solar surplus).

- In networking, we can’t move where the user is, but we can sometimes choose different network paths. One could route data through regions where the grid is currently greener (if multiple paths exist). This is akin to moving workload to a cleaner power location.

- For instance, if a packet can go through either a route in Region A (coal-heavy power at the moment) or Region B (renewable-heavy at the moment), an SDN controller could choose Route B to lower instantaneous carbon emissions.

- This concept has been floated as “carbon-aware routing”–akin to data center carbon-aware scheduling.

- Demand shifting:

- Demand shifting also connects to ideas like time-of-use routing. If an ISP is charged variable electricity rates, it might try to schedule heavy data moves at cheaper rate times, indirectly aligning with when the grid might be greener (often off-peak night).

- This is essentially aligning network usage patterns with energy availability, much like data centers doing non-urgent compute when renewable energy is high (some companies delay Bitcoin mining or water pumping until midday solar, for example).

- Resource virtualization and consolidation:

- Data centers also pack VMs onto fewer servers during low load so other servers can be turned off (or put in deep sleep).

- Networks can do the analogous thing: consolidate traffic on fewer links or devices so that others can be shut off.

- For example, instead of having 4 parallel fibers lightly used, concentrate traffic on 2 and shut the other 2 off at night. That’s effectively consolidating workloads onto fewer “active” network elements.

- Telemetry:

- In data centers, there is an emphasis on measurement and feedback – using telemetry of power to inform workload placement.

- Networks could similarly use telemetry: measure in real-time which routers are running hot (power-wise) and see if load can be shifted away from them or if they can be throttled. Essentially, closed-loop control: data centers do this by moving VMs, networks could do it by rerouting traffic.
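
The demand-shifting idea from the list above (delaying non-urgent transfers to times when the network is quiet and the grid is cleaner) can be sketched as a window search over hourly forecasts. The forecast values, the utilization ceiling, and the function name are hypothetical; a real scheduler would also need deadlines and fairness rules.

```python
def best_start_hour(carbon_g_per_kwh, utilization, duration_h,
                    max_utilization=0.6):
    """Pick the start hour whose window has the lowest total carbon intensity.

    Windows containing any hour busier than `max_utilization` are skipped.
    Returns None if no window qualifies.
    """
    n = len(carbon_g_per_kwh)
    if len(utilization) != n or not 1 <= duration_h <= n:
        raise ValueError("forecasts must align and duration must fit")
    best_score, best_start = None, None
    for start in range(n - duration_h + 1):
        hours = range(start, start + duration_h)
        if any(utilization[h] > max_utilization for h in hours):
            continue  # network too busy at some point in this window
        score = sum(carbon_g_per_kwh[h] for h in hours)
        if best_score is None or score < best_score:
            best_score, best_start = score, start
    return best_start


# Hypothetical six-hour forecast for a two-hour backup transfer.
carbon = [500, 450, 200, 180, 300, 520]
load = [0.3, 0.2, 0.8, 0.4, 0.3, 0.9]
start = best_start_hour(carbon, load, duration_h=2)
```

In this example the cleanest single hour coincides with a busy network, so the scheduler settles on the window starting at hour 3, which is both quiet enough and relatively clean.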

How do emerging networking technologies such as Software-Defined Networking (SDN), 5G infrastructure, and satellite-based networks affect overall energy consumption and emissions? Could these technologies help reduce Internet networking emissions through increased efficiency, or are they likely to increase total emissions due to higher usage and infrastructure demands? (TC)¶

Software-Defined Networking (SDN):

- SDN by itself is a network management paradigm – its impact on energy comes from how it’s used. SDN centralizes control, allowing more flexible management of traffic. This can help reduce energy consumption if leveraged for that purpose. For example, an SDN controller can more easily implement energy-aware routing: dynamically turning off certain links or nodes and rerouting traffic through others during low-demand periods, something that’s hard with traditional distributed protocols.

- SDN can also facilitate network virtualization, meaning you can run multiple virtual networks on the same physical infrastructure, increasing utilization and avoiding duplicate hardware. By enabling such consolidation, SDN can reduce the total number of devices active. One concrete advantage is the ability to program switches to do only what’s needed – e.g., if a particular switch isn’t needed for some flows, an SDN controller can idle it, whereas traditionally it might still be doing some default forwarding.

- In essence, SDN is an enabler for energy efficiency – it provides the fine-grained control and global visibility necessary to implement many of the power-saving strategies we’ve discussed (like sleeping links, adaptive topology). Without SDN, an ISP might hesitate to turn off a link, but with a controller that can instantly reroute traffic, it becomes feasible.

- On the other hand, SDN doesn’t magically make hardware more efficient; also, SDN controllers themselves are servers that consume some power (though relatively negligible compared to the network devices). So SDN’s net effect on emissions depends on usage: used smartly, it reduces emissions by optimizing the network; used without energy-aware policies, it changes little.

- There’s little downside except complexity – SDN could slightly increase load on control-plane networks, but that’s minor. SDN is therefore likely to help reduce networking emissions by unlocking advanced energy management techniques.
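The energy-aware routing described above can be sketched as a greedy pass that a controller with a global view might run during low-demand periods: try to sleep the least-loaded links first, and keep any link whose removal would disconnect the topology. This is a simplified illustration under stated assumptions: the function names and the load map are hypothetical, and the sketch checks connectivity only, ignoring link capacity and redundancy, which a real controller would also have to verify before rerouting traffic.

```python
from collections import deque


def is_connected(nodes, links):
    """Breadth-first check that every node is reachable over undirected links."""
    nodes = set(nodes)
    if not nodes:
        return True
    adjacency = {node: set() for node in nodes}
    for a, b in links:
        adjacency[a].add(b)
        adjacency[b].add(a)
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in adjacency[current]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen == nodes


def links_to_sleep(nodes, link_load):
    """Greedily pick links to sleep, least-loaded first, keeping connectivity.

    link_load maps (node_a, node_b) tuples to a utilization figure.
    Assumption: capacity on the remaining links is not checked here.
    """
    active = set(link_load)
    asleep = []
    for link in sorted(link_load, key=lambda l: (link_load[l], l)):
        candidate = active - {link}
        if is_connected(nodes, candidate):
            active = candidate
            asleep.append(link)
    return asleep
```

Because the greedy pass only stops when removing another link would partition the network, it ends on a spanning tree with no spare paths. In practice one would likely stop earlier, for example by requiring two disjoint paths between key nodes or by capping utilization on the surviving links.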

5G Infrastructure:

- On a per-bit basis, 5G is designed to be much more efficient than 4G. Techniques like massive MIMO (which improves spectral and energy efficiency), beamforming (sending radio energy more directly where needed), and higher bandwidths mean energy per bit can be lower. Also, 5G standards incorporate more aggressive sleep modes for base stations – for instance, turning off some channels during low traffic and waking up faster. 5G also uses a lean carrier design: unlike 4G, which constantly broadcasts control signals, 5G can shut off some signals when not in use, reducing idle energy. All of this suggests that, to deliver a given amount of data, 5G will use fewer joules than previous technologies.

- However, 5G also entails more infrastructure: it uses higher frequency bands (like mmWave) which have short range, requiring many small cells to blanket an area. Even the “traditional” sub-6 GHz 5G will push operators to densify networks to achieve capacity targets. More cell sites = more total power, even if each is more efficient than an equivalent 4G site. Additionally, 5G aims to connect many new devices (IoT, etc.) and encourages data-heavy applications (4K video, AR/VR). This likely increases total data traffic significantly – and historically, when capacity increases, usage tends to fill it (the “rebound effect”). Operators have reported that initial 5G rollouts are causing double-digit percentage increases in network energy consumption because they are running 5G in addition to 4G (often co-located).

- Over time, as 5G replaces 4G and if older networks are retired, the efficiency gains should be realized. The critical point is whether the efficiency per bit outpaces the growth in bits. If 5G results in say 10× the traffic but is only 5× more efficient, net energy use still goes up ~2×. On the flip side, if managed well, 5G could handle that 10× traffic for maybe the same energy as 4G needed for much less – especially with features like AI-driven sleep modes adjusting sectors in real time (an area of active development).
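The "efficiency per bit versus growth in bits" comparison above reduces to a single ratio. The sketch below just restates that arithmetic; the function name is hypothetical, and the model deliberately ignores fixed overheads such as running 4G and 5G side by side.

```python
def relative_energy(traffic_growth: float, efficiency_gain: float) -> float:
    """Energy use relative to the baseline network.

    traffic_growth: multiple of baseline traffic (10 means 10x the bits).
    efficiency_gain: multiple of baseline bits delivered per joule.
    """
    if traffic_growth < 0 or efficiency_gain <= 0:
        raise ValueError("traffic_growth must be >= 0 and efficiency_gain > 0")
    return traffic_growth / efficiency_gain
```

`relative_energy(10, 5)` gives 2.0, the roughly twofold increase mentioned above, while `relative_energy(10, 10)` gives 1.0: energy stays flat only if efficiency improves as fast as traffic grows.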

Satellite-based Networks:

- Systems like SpaceX’s Starlink, OneWeb, etc., introduce a new layer of infrastructure: hundreds to thousands of satellites in low Earth orbit (LEO) plus ground stations and user terminals.

- In terms of operational energy, satellites themselves are solar-powered in space (so one could say their operation uses renewable energy and doesn’t draw from the grid, although manufacturing and launch carry large embodied emissions).

- But the user side of satellite internet is quite energy-intensive. The user terminals (satellite dishes) track satellites and use phased array antennas that consume a significant amount of power (a Starlink user terminal draws on the order of 50-100 W continuously). That’s much higher than a typical fiber or DSL modem (which might draw 5-10 W). If satellite internet scaled to tens of millions of users, the power consumed by all those dishes could be substantial.

- Also, the ground stations that connect the satellite constellation to the terrestrial Internet use high-power RF equipment to talk to satellites and fiber links to backhaul data – those are similar to a cell tower in power footprint.

- So, adding satellites effectively adds a parallel network rather than replacing existing ones, likely increasing overall energy usage. Satellites do not offload much from ground networks except in areas where ground networks don’t exist (remote regions).

- In urban areas, satellites are less efficient than terrestrial networks for connectivity. So if people use satellites in cities, that’s an inefficient use (hopefully they won’t, as the service is targeted at rural areas).

- Another point: satellites have higher latency and limited bandwidth, which might constrain some high-data uses, but as technology improves, usage might grow.

- We should also consider that launching rockets for satellites is extremely carbon-intensive (though that’s an embodied emission, not operational). Frequent satellite replenishment (LEO satellites have ~5-year lifespans) means an ongoing emissions impact beyond power consumption.

- Could satellites help reduce emissions? Possibly in edge cases: for instance, if satellites enable a lot of data to bypass multiple inefficient terrestrial hops, there might be a few cases of savings.

- But generally, terrestrial fiber is so efficient that bouncing data through space is inherently less energy-efficient per bit (radio at long distance vs fiber). Satellite networks are mostly about coverage, not efficiency.
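To give a sense of scale for the user-terminal point above, the sketch below multiplies a per-device draw by a fleet size. The inputs are assumptions for illustration only: 10 million terminals (the low end of "tens of millions"), 75 W as the midpoint of the 50-100 W range, 7.5 W as the midpoint of the 5-10 W modem range, and continuous operation all year.

```python
HOURS_PER_YEAR = 24 * 365  # continuous operation assumed


def fleet_power_mw(devices: int, watts_per_device: float) -> float:
    """Aggregate continuous draw of a device fleet, in megawatts."""
    return devices * watts_per_device / 1e6


def annual_energy_twh(devices: int, watts_per_device: float) -> float:
    """Energy used over one year of continuous operation, in terawatt-hours."""
    return fleet_power_mw(devices, watts_per_device) * HOURS_PER_YEAR / 1e6


# Illustrative inputs (assumptions, not measurements).
terminals = 10_000_000
satellite_mw = fleet_power_mw(terminals, 75)   # midpoint of 50-100 W
modem_mw = fleet_power_mw(terminals, 7.5)      # midpoint of 5-10 W
```

Under these assumptions the satellite terminals draw about 750 MW continuously (roughly 6.6 TWh per year), against about 75 MW for the same number of fiber or DSL modems. The tenfold gap follows directly from the per-device figures; it excludes ground stations on one side and the rest of the access network on the other.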

Which foundational concepts from computer science and electrical engineering (such as energy proportionality, static power draw, power management algorithms) are most relevant to guiding our network sustainability investigation? (TC)

Several core computer science (CS) and electrical engineering (EE) concepts form the theoretical backbone for understanding and improving network energy efficiency:

- Energy Proportionality: This concept, originating from computer architecture (Barroso and Hölzle for servers), states that ideally a system’s power draw should scale linearly with the work it’s doing. If it’s doing nothing, it should use almost no power; if it’s at 50% load, it should draw ~50% of its peak power, etc. In networks, current devices are far from energy-proportional – they draw a high constant power regardless of load. Striving for energy proportionality in routers and switches is a guiding principle. It means designing hardware and protocols so that idle or low-utilization periods consume substantially less power. This concept leads us to explore idle logic, sleep modes, dynamic scaling, etc., all aimed at proportional energy use. Energy proportionality is almost a yardstick for our progress: a fully optimized network would be energy-proportional (or even better, power down to zero when not in use).

- Static vs Dynamic Power Draw (and Power Management): In EE, especially for CMOS circuits, total power = static (leakage, baseline) + dynamic (switching dependent on activity). Networking equipment has significant static power – e.g., just keeping circuits biased, clocked, and ready contributes a baseline consumption. Recognizing this, we look at methods to reduce static draw: power gating (turning off power to parts of a chip when not needed), and reducing leakage (via process tech or lower voltages). On the dynamic side, dynamic voltage and frequency scaling (DVFS) can lower voltage/frequency on network chipsets during low demand. These are standard EE techniques used in CPUs that are directly relevant to network ASICs. Many network chips historically ran at fixed frequency/voltage for determinism; updating that mindset is a challenge worth pursuing.

- Queuing Theory and Traffic Bursts: From CS/networking theory, how traffic is distributed in time affects energy use. If packets arrive in a burst and then a link is idle, one could shut the link during idle. This ties to coalescing and batch processing concepts – similar to OS batching disk I/O for energy efficiency. Understanding the queueing behavior (like Poisson vs bursty traffic) helps in devising algorithms that can create gaps for sleeping. Concepts like duty cycle and sleep scheduling in communications (used in sensor networks heavily) are relevant – essentially a form of queuing where you queue until you have enough to justify waking up, trading off latency.

- Algorithmic Complexity vs. Energy: Many CS algorithms can be optimized for energy by reducing computation or memory access. In networking, routing algorithms that are simpler or execute less often can save CPU cycles on route processors (though that’s minor compared to data plane energy). However, consider encryption: a more energy-efficient encryption algorithm (perhaps one with hardware acceleration) could save energy. So understanding computational efficiency (Ops per Joule) is relevant. Also, approximation algorithms – maybe we don’t always need maximum performance; an approximate solution could be more energy-saving. This concept can be applied e.g. in network routing decisions that trade a bit of optimality for lower overhead.

- Control Theory and Feedback Systems: Maintaining performance while saving energy might require adaptive control. Concepts like PID controllers or modern machine learning controllers to manage power states could come from control theory. Also, stability is an issue: turning devices on/off can cause oscillations if not controlled – a control theory approach ensures stable operation.

- Electrical Power Engineering basics: Knowing about AC/DC conversion efficiency, power factor, etc., is surprisingly relevant. A network facility might waste energy in conversions from AC mains to 48V DC for telecom equipment. Minimizing conversion steps or using higher efficiency converters (concepts from power electronics) can reduce losses. Also battery chemistry (for backup) – not directly network operation, but if we optimize how often batteries are used (float vs deep discharge), we can prolong life (less replacement emissions).

- Information Theory (trade-offs between power and capacity): In wireless especially, Shannon’s theory relates signal power, bandwidth, and capacity. To save energy, one might trade bandwidth for power: for example, using a wider band at lower power vs narrower band at higher power. Understanding those trade-offs (bit energy versus spectral efficiency) guides strategies like using lower modulation orders when possible to use less power. Also coding: stronger error correction codes can reduce retransmissions at cost of a bit more processing.

- Parallelism vs Serialism: As mentioned, turning off parallel resources and using one at higher utilization might save energy if the fixed cost of each resource is high (this is an aspect of Amdahl’s law vs Gustafson’s law in a way – distributing load vs concentrating it). We apply that concept when deciding how many links to power on, or how many router line cards to use.

- Hardware-Software Co-design: A concept in computer engineering where hardware and software are designed together for efficiency. In networks, this suggests we look at protocol design with hardware constraints in mind (like McAllister’s work did for caching). It’s not a single concept, but a methodology that’s very relevant for sustainability: e.g., design a routing protocol that allows hardware to shut down parts (OSPF might not be designed with that in mind).

- Telecommunications engineering fundamentals: Concepts like link budget and power control – in cellular networks, dynamically adjusting transmit power to the minimum needed for link quality saves energy and reduces interference. 5G employs power control algorithms rooted in these fundamentals.

- Energy Harvesting and storage (in context of networks): Not a classical concept for core networks, but in sensor networks, nodes can harvest energy. Similar thinking could be applied e.g. to remote telecom towers with solar panels (optimize usage to match harvest cycles).

- Moore’s Law and Dennard Scaling: Historically, chips got faster and more energy-efficient with each process generation. While Dennard scaling (power density staying roughly constant as transistors shrink) has broken down, new technologies (like specialized ASICs, FPGAs, or even optical switching) might bring leaps in efficiency. Understanding those trends helps gauge future potential improvements.
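The energy proportionality and static-versus-dynamic power ideas above can be made concrete with a simple linear power model: a fixed idle draw plus a load-dependent part. The sketch below is an illustration under stated assumptions. The linear form is a common simplification rather than a measured device curve, and the function names and the example router figures are hypothetical.

```python
def device_power_w(utilization: float, idle_w: float, peak_w: float) -> float:
    """Linear model: static (idle) draw plus a part that grows with load."""
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be in [0, 1]")
    return idle_w + (peak_w - idle_w) * utilization


def energy_per_bit_nj(utilization: float, idle_w: float, peak_w: float,
                      capacity_gbps: float) -> float:
    """Energy per delivered bit in nanojoules.

    Watts divided by Gbit/s equals nJ per bit, so no extra scaling is needed.
    """
    if utilization <= 0:
        raise ValueError("energy per bit is undefined with no traffic")
    throughput_gbps = utilization * capacity_gbps
    return device_power_w(utilization, idle_w, peak_w) / throughput_gbps


def proportionality(idle_w: float, peak_w: float) -> float:
    """Share of peak power that actually scales with load (1.0 = ideal)."""
    return (peak_w - idle_w) / peak_w
```

For a hypothetical 100 Gbit/s router that idles at 900 W and peaks at 1000 W, `energy_per_bit_nj(0.1, 900, 1000, 100)` is about 91 nJ per bit, against 10 nJ per bit at full load. An ideal energy-proportional device (`idle_w=0`) would stay at 10 nJ per bit at any utilization, which is why the idle term is the main target of sleep modes and power gating.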

Summary

- High Baseline Power Use: Networking devices consume a high base level of power even when traffic is low, adding only a small increment as traffic increases. This means much of their energy draw is a fixed overhead rather than proportional to the data throughput. Inefficiencies are compounded when devices perform unnecessary work (such as redundant retransmissions or idle listening) or remain at full power during periods of light traffic. These issues highlight the need for hardware that can throttle power when idle and for network management that consolidates data flows onto fewer devices (allowing unused equipment to be turned off).

- Overprovisioning Waste: Telecom networks are built to handle peak traffic loads, resulting in significant overprovisioning. During off-peak hours, much of that capacity sits idle but still draws power. Thus, operating such infrastructure continuously wastes energy during low-demand periods.

- Metrics and Carbon Footprint: Sustainability metrics for networks gauge energy use relative to the service delivered (e.g., bits transferred or users served per Joule). These measurements are increasingly tied to carbon emissions. Industry groups like the IETF (Internet Engineering Task Force) are establishing standards to report such metrics. For example, a 2024 IETF draft proposes instrumentation to track network power usage, energy efficiency (bits/Joule), and carbon footprint.

- Impact of Data Path: Where and how far data travels within a network has a direct impact on energy consumption. Optimizing routes and keeping traffic local when possible can reduce overall energy use.